Ever felt like math is a language you just can’t speak?

Well, you’re not alone.

But today, I’m here to change that. We’re going to explore the world of vector embeddings – sounds fancy, right? But trust me, it’s simpler than you think.

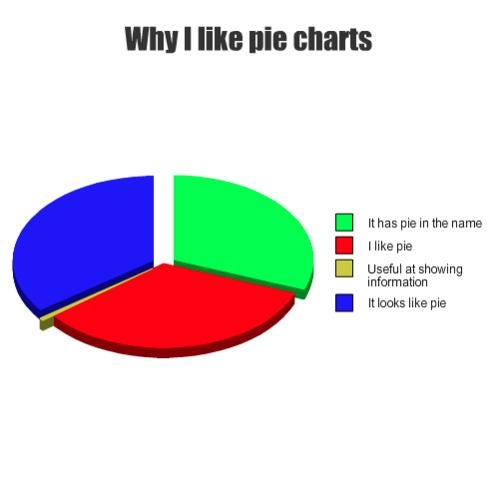

Forget the days of daunting equations and numbers; we’re about to make math as easy as pie (chart).

Let’s talk Vector Embeddings

Before you decide it’s too complex, let me assure you: it’s surprisingly easy to understand.

And once you do, it will help you understand and design AI tools and solutions even better.

Today, we’re not just learning about it; we’re decoding it into everyday language. So, let’s jump in and turn you into a fan of this mathematical marvel!

Starting Simple: The World of One Dimension

Picture a straight line, stretching infinitely in both directions.

Now, imagine a point on this line, let’s name it Point Z. Where is Z located? Easy! It’s just a number away from the origin. Say Z is 4 units to the right. In math speak, this is a “1-dimensional space,” where a single number nails down any point’s location.

Introducing Another Player: The Second Dimension

Let’s level up! Add another axis perpendicular to our original line. Now we’ve got two dimensions. To locate a point here, say Point Y, we need two numbers. One for each axis, like (4, 3).

It’s just like adding points on a graph in school.

Quick Recap:

- In a 1D space (one line), one number defines each point (x = 4)

- In a 2D space (two lines crossing), two numbers define each point (x = 4, y = 3)

The Leap into Three Dimensions

This is where most of us feel at home. Our world is 3D, after all. To specify a point here, like Point X, we use three numbers (like 4, 3, 5).

These numbers represent coordinates in our three-dimensional space.

The Conceptual Jump: Beyond Three Dimensions

Brace yourself! Imagine a space with more dimensions – 4, 5, even 100. It’s hard to visualize, but mathematically, it’s a cakewalk. In a 100-dimensional space, for instance, a point is pinpointed by 100 numbers!
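To make the jump concrete, here is a minimal Python sketch of the idea so far. It treats a point as a plain tuple of numbers, so adding a dimension just means adding a number. The 100-dimensional point and the `distance` helper are made up for illustration; they are not from any particular library.

```python
import math

# A point is just a tuple of numbers; the tuple's length is the dimension.
point_z = (4,)                 # 1D: one number
point_y = (4, 3)               # 2D: two numbers
point_x = (4, 3, 5)            # 3D: three numbers
point_w = tuple(range(100))    # 100D: a made-up point with 100 numbers


def distance(a, b):
    """Straight-line (Euclidean) distance between two points of equal dimension."""
    if len(a) != len(b):
        raise ValueError("points must have the same number of dimensions")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


print(len(point_w))                 # how many numbers pin down the 100D point
print(distance((0, 0), point_y))    # how far Point Y is from the 2D origin
```

Notice that `distance` never asks how many dimensions it is working in. The same few lines handle 2 numbers or 100, which is why the math stays easy even when the picture gets impossible to draw.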

Vector Embeddings: Connecting Dots in N-Dimensional Space

Now, let’s connect this to vector embeddings. Imagine each point in these multi-dimensional spaces is not just a dot but a data point, representing something complex, like a word or a sentence. Points that sit close together represent things with similar meanings.

Vector Embeddings in AI

Consider a scenario in AI: ada-003, an imaginary vector embedding model by OpenAI, which maps text into a space with, say, 2048 dimensions. Here, each sentence is represented by 2048 numbers, capturing its essence.

Practical Application: Vector Embeddings and Semantic Search

The real magic of vector embeddings lies in semantic search. Think of searching not just by keywords but by the meaning or context of your query. For instance, looking for information on “innovative fruit-based technologies” might lead you to discussions about Apple Inc., even though the query never names the company, because the query and those discussions land near each other in the embedding space.
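Here is a toy sketch of how such a search could work. Everything in it is an assumption made for illustration: the three-number vectors are written by hand (a real embedding model would produce them, with far more dimensions), the document titles are invented, and cosine similarity is just one common way to measure how closely two vectors point in the same direction.

```python
import math


def cosine_similarity(a, b):
    """Close to 1.0 when two vectors point the same way, near 0.0 when unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made "embeddings", invented for this example only.
# Think of the three numbers as (technology, fruit, sports).
documents = {
    "Apple Inc. unveils a new phone": (0.9, 0.8, 0.1),
    "How to bake an apple pie": (0.1, 0.9, 0.2),
    "Local football results": (0.1, 0.0, 0.9),
}

# Hypothetical vector for the query "innovative fruit-based technologies".
query_vector = (0.8, 0.6, 0.0)


def search(query, docs):
    """Return document titles ordered from most to least similar to the query."""
    return sorted(
        docs,
        key=lambda title: cosine_similarity(query, docs[title]),
        reverse=True,
    )


for title in search(query_vector, documents):
    print(title)
```

With these made-up numbers, the Apple Inc. document ranks first even though it shares no keywords with the query: its vector simply points in nearly the same direction.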

Wrapping Up: The Power of Vector Embeddings

So, there you have it – a journey from simple lines to a complex, multi-dimensional understanding of vector embeddings.

It’s not just math; it’s a doorway to a whole new world of understanding data and AI.

Stick around and subscribe, and next time, we’ll explore how vector embeddings power cutting-edge technologies in AI!

Source & inspiration: Agent.ai