In February 2025, Andrej Karpathy, one of the most influential educators and engineers in modern AI and a founding member of OpenAI, published a three-and-a-half-hour video, “Deep Dive into LLMs like ChatGPT”. This article explains why it is essential viewing for anyone working in AI and introduces the man behind it.

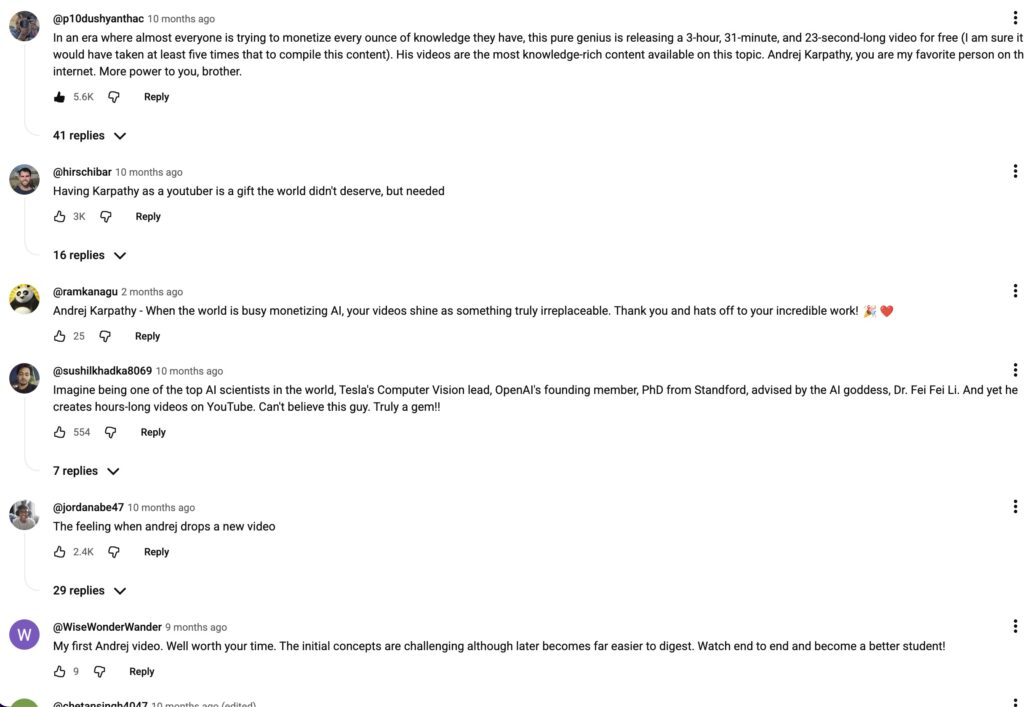

Here are some of the comments below the video:


Karpathy’s three and a half hour lecture is a guided tour through the entire lifecycle of large language models (LLMs) — from data collection and tokenization to post‑training reinforcement.

Despite its very technical subject matter, the video is easy to follow and provides mental models that help you reason about what LLMs are and how they actually work.

Because LLMs now underpin many AI products and research directions, understanding their training pipeline and limitations has become as essential as knowing how to code.

Watching the full video gives AI professionals and enthusiasts this general but fundamental understanding, and it highlights both the magic and the sharp edges of today’s language models.

Before we jump into the video, let’s first answer the question:

Who Is Andrej Karpathy?

Andrej Karpathy is one of the most influential educators and engineers in modern AI.

He was a founding member of OpenAI, later served as Director of AI at Tesla, and led the development of the Autopilot computer‑vision system.

During his PhD at Stanford, he co‑designed and taught the university’s first deep‑learning course, CS231n, which has since become one of the most popular machine‑learning courses in the world. Time magazine describes him as a key figure whose online lectures have reached millions and notes that he recently launched Eureka Labs, an AI‑native education platform, to bring AI‑assisted teaching tools to students.

Karpathy’s teaching style is strongly influenced by the physicist Richard Feynman; he prioritizes clarity and builds intuition from the ground up. He often publishes free lectures, code examples (e.g., micrograd, nanoGPT), and open‑source tools that demystify complex AI concepts.

These free, high-quality contributions have made him an anchor of the AI community and earned him a reputation for making cutting‑edge research accessible.

What the Video Covers

Karpathy structures the lecture around the full LLM pipeline, with careful explanations of both the underlying mathematics and the practical engineering trade‑offs.

Below is an outline of the key topics he covers:

Introduction and Mental Models

LLMs are, at their core, next‑token predictors trained to maximize likelihood over huge corpora. He emphasizes that while LLMs can feel magical, they are not sentient; they operate by predicting the most probable continuation of a sequence.
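
To make "next‑token prediction" concrete, here is a minimal sketch that is not taken from the video: a bigram model that counts which word follows which, then predicts the most probable continuation. A real LLM replaces the count table with a transformer over sub‑word tokens, but the objective has the same shape. The toy corpus and the function names are assumptions chosen for illustration.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Turn the raw counts for `prev` into a probability distribution."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}

def avg_negative_log_likelihood(counts, tokens):
    """Average -log p(next | prev) over the sequence.

    Maximizing likelihood means driving this number down. This toy version
    only works on text whose bigrams were all seen during training.
    """
    losses = []
    for prev, nxt in zip(tokens, tokens[1:]):
        p = next_token_probs(counts, prev)[nxt]
        losses.append(-math.log(p))
    return sum(losses) / len(losses)

# Toy corpus (an assumption for illustration only).
corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram(corpus)

probs = next_token_probs(model, "the")
print(probs)                                        # distribution over what follows "the"
print(max(probs, key=probs.get))                    # the most probable continuation
print(avg_negative_log_likelihood(model, corpus))   # the training loss
```

In this corpus "the" is followed by "cat" twice and "mat" once, so the model prefers "cat". Nothing here understands cats; it is purely statistics over sequences, which is the point of the mental model.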

He introduces the idea of prompting as programming: the user writes a specification in natural language, and the model generates a completion. Understanding prompts as instructions helps demystify why specific phrasing can dramatically change outputs.

Pre‑training: Data, Tokens and Transformers

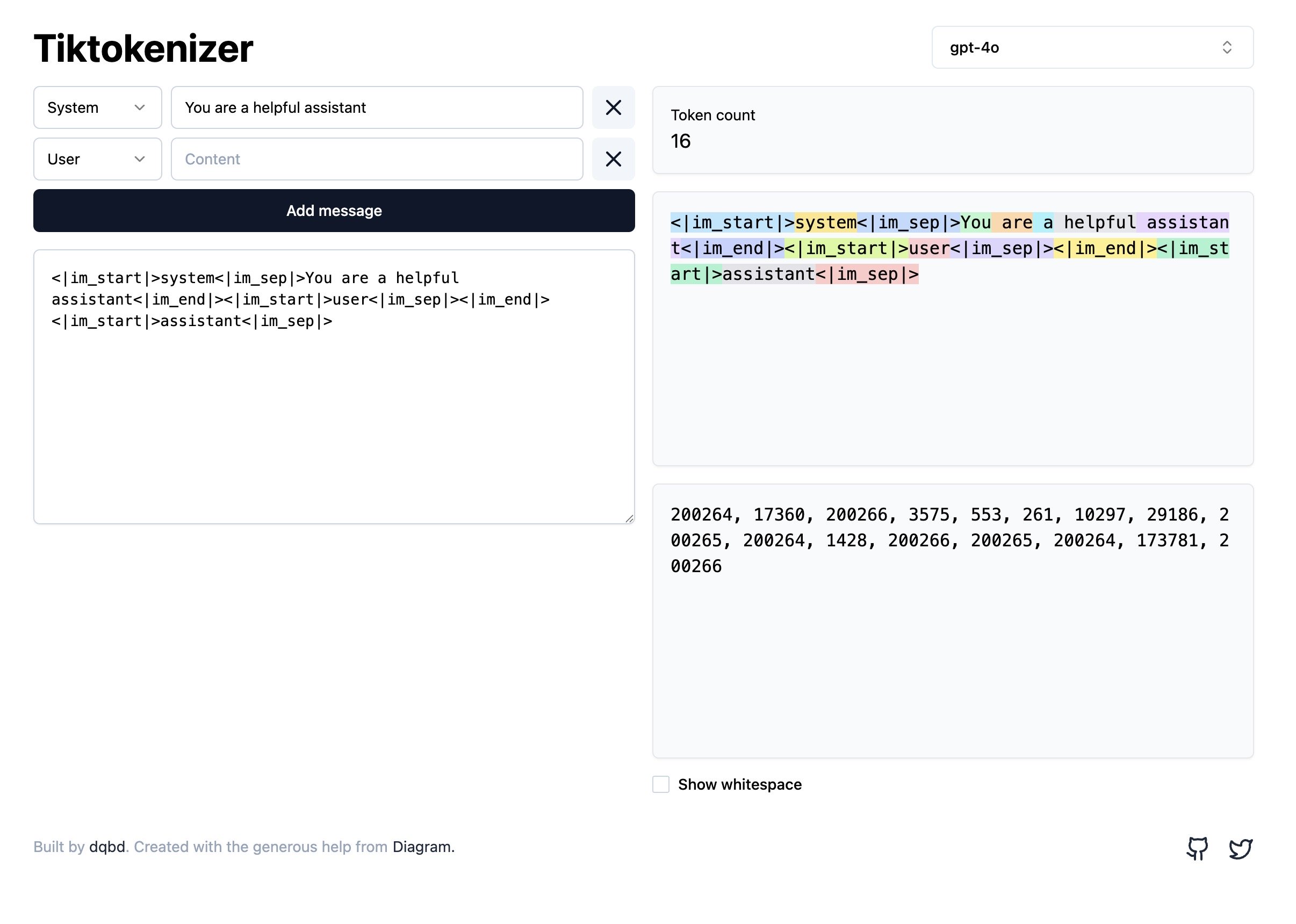

The first stage involves ingesting vast amounts of internet text. Karpathy explains how text is split into tokens and fed into a transformer architecture. He explains attention mechanisms intuitively and demonstrates how context length (e.g., 4k, 8k, 128k tokens) affects capability. In this stage the model acquires general knowledge, but it also inherits biases and noise from the source data.
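
As a rough illustration of how text becomes tokens, the sketch below performs two merge steps in the style of byte‑pair encoding: start from raw bytes, find the most frequent adjacent pair, and replace it with a new token id. It is a toy under stated assumptions (the sample string, the number of merges, and the helper names are made up here), not the tokenizer of any particular model.

```python
from collections import Counter

def most_frequent_pair(ids):
    """Return the adjacent pair of token ids that occurs most often."""
    pairs = Counter(zip(ids, ids[1:]))
    return pairs.most_common(1)[0][0]

def merge_pair(ids, pair, new_id):
    """Replace every occurrence of `pair` with the single id `new_id`."""
    merged, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            merged.append(new_id)
            i += 2
        else:
            merged.append(ids[i])
            i += 1
    return merged

text = "low lower lowest"              # sample text, chosen for illustration
ids = list(text.encode("utf-8"))       # start from raw bytes: ids in 0..255
next_id = 256                          # first id not used by a raw byte

for _ in range(2):                     # two merges, purely for illustration
    pair = most_frequent_pair(ids)
    ids = merge_pair(ids, pair, next_id)
    next_id += 1

print(len(text.encode("utf-8")), "bytes ->", len(ids), "tokens")
```

Each merge shortens the sequence and grows the vocabulary by one entry. Production tokenizers repeat this many thousands of times over a large corpus, which is why common words end up as a single token while rare strings are split into several.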

Fine‑tuning and Safety

Next, he discusses supervised fine‑tuning, where the model is trained on curated question‑answer pairs, code examples and chat logs. This step aligns the model with a desired persona (e.g., helpful assistant), reduces toxicity and improves factual accuracy. It also introduces safety guardrails and specialized capabilities, such as code generation.
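
One way to picture the fine‑tuning data: each curated conversation is flattened into a single piece of text, which is then tokenized and trained on with the same next‑token objective as before. The sketch below assumes hypothetical role markers (`<|user|>`, `<|assistant|>`, `<|end|>`); real chat models each define their own special tokens and templates.

```python
# Hypothetical role markers, invented for this sketch.
USER, ASSISTANT, END = "<|user|>", "<|assistant|>", "<|end|>"

def render_conversation(turns):
    """Flatten a list of (role, text) turns into one training string."""
    marker = {"user": USER, "assistant": ASSISTANT}
    return "".join(f"{marker[role]}{text}{END}" for role, text in turns)

# A single curated question-answer pair (made up for illustration).
example = [
    ("user", "What is 2 + 2?"),
    ("assistant", "2 + 2 = 4."),
]

print(render_conversation(example))
```

Seen this way, the "assistant persona" is a pattern the model learns to continue: given text that ends with an assistant marker, the likeliest continuation is a helpful answer in the style of the curated examples.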

Reinforcement Learning from Human Feedback (RLHF)

Karpathy provides an accessible overview of RLHF/RLAIF, where a reward model is trained to prefer better responses. The LLM then learns to maximize this reward via reinforcement learning, leading to more coherent reasoning and safer outputs. He notes that the process is iterative and expensive but necessary to push models beyond mere mimicry.
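
What does "trained to prefer better responses" look like in practice? One common formulation, shown here as an assumption rather than as the method used in the lecture, is a pairwise loss: the reward model scores two responses to the same prompt, and the loss is small when the response humans preferred gets the higher score. The reward values below are made‑up numbers.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Written as log(1 + exp(-margin)), which is the same quantity.
    """
    margin = reward_chosen - reward_rejected
    return math.log1p(math.exp(-margin))

# Made-up reward scores for two responses to the same prompt.
print(preference_loss(2.0, 0.0))   # preferred response scored higher: small loss
print(preference_loss(0.0, 0.0))   # no preference expressed: loss equals log(2)
print(preference_loss(0.0, 2.0))   # preferred response scored lower: large loss
```

Minimizing this loss over many human comparisons pushes the reward model to rank responses the way people do. The reinforcement‑learning step then tunes the LLM to produce responses that the reward model scores highly.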

Evaluation and Limitations

Throughout the lecture, Karpathy is candid about limitations: hallucinations (confidently wrong statements), brittle reasoning on complex tasks, difficulties with long‑horizon planning and the inherent unpredictability of large autoregressive models. He discusses strategies like prompt engineering, self‑reflection and retrieval‑augmented generation (RAG) to mitigate these issues. He also touches on ethical concerns around misuse, bias and the environmental cost of training.
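
The idea behind RAG can be sketched in a few lines: look up relevant text first, then put it into the prompt so the model can read the answer instead of recalling it from memory. The example below is a deliberately naive version that ranks documents by shared words; real systems typically use embedding‑based search. The documents, the prompt wording, and the function names are all assumptions for illustration.

```python
def words(text):
    """Lowercase the text and split it into a set of words, ignoring '?' and '.'."""
    return set(text.lower().replace("?", " ").replace(".", " ").split())

def retrieve(question, documents, k=1):
    """Return the k documents that share the most words with the question."""
    q = words(question)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents):
    """Place the retrieved text in front of the question."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Tiny made-up document store.
docs = [
    "Tokenization splits text into tokens.",
    "RLHF trains a reward model on human preferences.",
]

print(build_prompt("What does tokenization do?", docs))
```

The resulting prompt is what would be sent to the model. Because the relevant fact now sits in the context window, the model is less likely to hallucinate it.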

Tools, Demos and Code

True to his Feynman‑inspired approach, Karpathy intersperses the talk with live coding demos using his nanoGPT implementation, showing how to build a tiny GPT from scratch. He demonstrates tokenization, training loops, sampling and how scaling up data and parameters leads to emergent abilities. These demos provide practitioners with a concrete starting point for experimentation.
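
Sampling is the easiest of these steps to try yourself. The sketch below is not code from nanoGPT; it is a minimal illustration, with made‑up scores, of how a model's raw scores (logits) become probabilities and how a temperature setting changes the randomness of the next token.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)                          # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(logits, temperature=1.0):
    """Draw one token index at random according to the softmax probabilities."""
    probs = softmax(logits, temperature)
    return random.choices(range(len(logits)), weights=probs, k=1)[0]

logits = [2.0, 1.0, 0.1]                       # made-up scores for a 3-token vocabulary

print(softmax(logits, temperature=1.0))
print(softmax(logits, temperature=0.5))        # more peaked: the top token dominates

random.seed(0)                                 # fixed seed so runs are repeatable
print([sample_token(logits, temperature=0.8) for _ in range(10)])
```

Because the next token is drawn at random rather than always taking the top choice, the same prompt can produce different completions on different runs.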

Why You Should Watch It, Especially If You Work in AI

The lecture translates dense research papers into intuitive analogies and code. Even seasoned researchers will find new ways to explain LLM concepts to colleagues and stakeholders.

Many people who work with AI use models via APIs without fully understanding how they were created.

Karpathy connects the dots between data, architecture, training and deployment, fostering a systems‑level view.

The talk highlights sharp edges (hallucinations, prompt injection, misalignment) and offers practical debugging techniques. Karpathy also explains recent advances, such as reinforcement‑learning improvements and scaling trends.

It is rare for a well‑known AI researcher to share such a comprehensive overview for free.

Watching the video also supports the ethos of open knowledge sharing.
