BuboGPT is a visionary AI model that can not only understand text but also see and hear. In essence, it brings together text, vision, and audio under one umbrella.

The poster child for the AI revolution over the last few years has undoubtedly been Large Language Models, or LLMs. Of these, ChatGPT stands as a testament to the strides we’ve made in natural language processing, earning a spot in our day-to-day digital interactions.

But what if the next big shift is already here? One that allows this text-savvy AI to not just ‘read’ but also see 👀 and hear 👂?

Beyond Just Text – The New Frontier

While LLMs have been crushing it in the text domain, some tech wizards felt these models were being held back by understanding only text.

The idea?

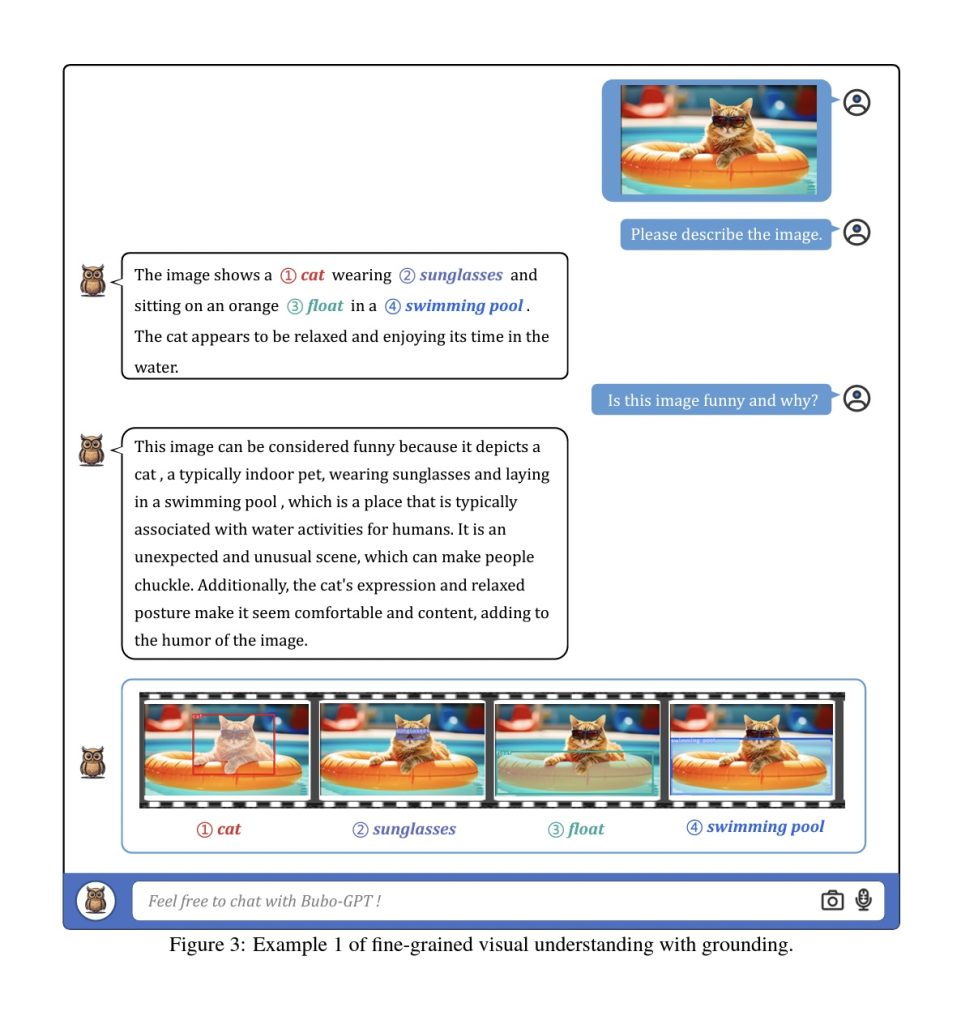

To break the boundaries and teach LLMs to understand images, videos, and even audio. But it’s one thing to recognize an image and another to truly understand the context and relationships between different visual elements.

That’s where BuboGPT steps in, offering a solution to this challenge.

BuboGPT: Visual Grounding in Multi-Modal LLMs

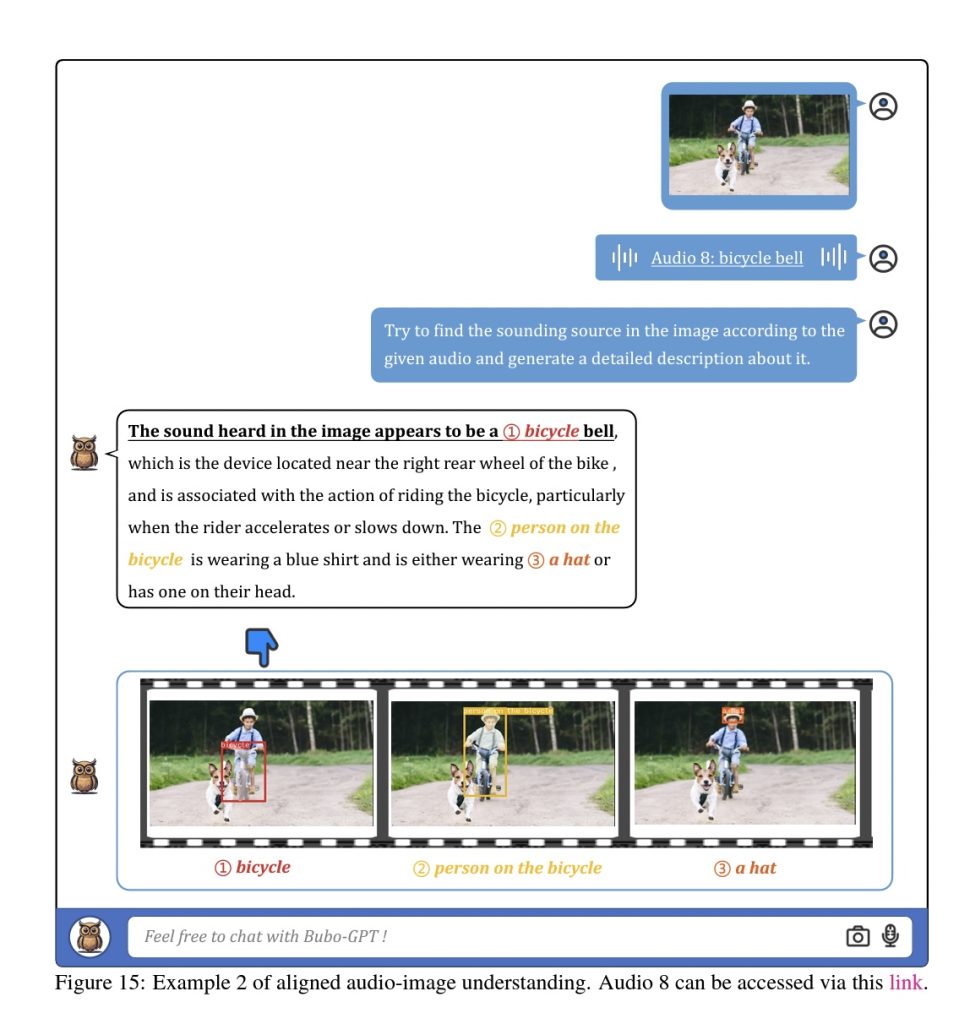

Imagine a chatbot that can describe a photo, interact based on a video, or even comment on a piece of music you like.

BuboGPT is designed to do just that! It’s not just about recognizing images or audio; it’s about grounding, or connecting, these modalities in a way that makes sense.

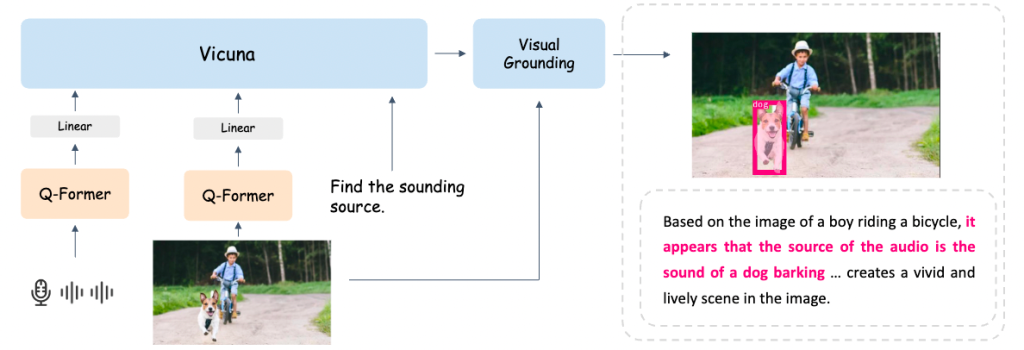

How Does BuboGPT Work?

BuboGPT achieves visual grounding, which is no walk in the park, through a step-by-step process:

- Tagging: Generating labels for the objects that appear in an image.

- Grounding: Locating the specific region in the image that corresponds to each label.

- Entity-Matching: Using an LLM's reasoning to connect the dots between the tags and the entities mentioned in the image description.

By using this method, BuboGPT enhances how it perceives and understands mixed inputs, creating a powerful multi-modal chatbot.
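To make the three steps more concrete, here is a toy Python sketch of how they chain together. It is a minimal illustration under stated assumptions, not BuboGPT's actual code: the "image" is a hypothetical dictionary mapping object names to bounding boxes, the tagging and grounding steps are stubs that simply read from it, and the LLM-based entity matching is swapped for a plain word-boundary regex. All function names (`tag_image`, `ground_tags`, `match_entities`, `ground_description`) are hypothetical.

```python
import re
from dataclasses import dataclass

# A bounding box as (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = tuple[int, int, int, int]


@dataclass
class GroundedTag:
    """A label paired with the image region it refers to."""
    tag: str
    box: Box


def tag_image(image_objects: dict[str, Box]) -> list[str]:
    """Step 1, Tagging (stub): a real system would run an image-tagging model.
    Here the hypothetical 'image' already lists its objects, so we return their names."""
    return sorted(image_objects)


def ground_tags(tags: list[str], image_objects: dict[str, Box]) -> list[GroundedTag]:
    """Step 2, Grounding (stub): find the region for each tag.
    A real system would use a detection or segmentation model instead of a lookup."""
    return [GroundedTag(tag, image_objects[tag]) for tag in tags if tag in image_objects]


def match_entities(description: str, grounded: list[GroundedTag]) -> dict[str, Box]:
    """Step 3, Entity matching (simplified stand-in): the article describes LLM
    reasoning for this step; this sketch only keeps tags whose name (or plain
    plural) appears as a whole word in the description."""
    matches: dict[str, Box] = {}
    for g in grounded:
        pattern = rf"\b{re.escape(g.tag)}s?\b"
        if re.search(pattern, description, flags=re.IGNORECASE):
            matches[g.tag] = g.box
    return matches


def ground_description(image_objects: dict[str, Box], description: str) -> dict[str, Box]:
    """Run tagging, grounding, and entity matching in order.
    Returns each entity mentioned in the description mapped to its image region."""
    tags = tag_image(image_objects)
    grounded = ground_tags(tags, image_objects)
    return match_entities(description, grounded)


if __name__ == "__main__":
    # Hypothetical image contents: object name -> bounding box.
    image = {
        "dog": (10, 40, 120, 200),
        "frisbee": (130, 20, 170, 60),
        "tree": (200, 0, 320, 240),
    }
    caption = "A dog leaps to catch a frisbee in the park."
    print(ground_description(image, caption))
    # {'dog': (10, 40, 120, 200), 'frisbee': (130, 20, 170, 60)}
```

The tree is tagged and grounded but dropped at the matching step, because the description never mentions it. In a real system each stub would be replaced by a model (an image tagger, a region detector or segmenter, and an LLM for matching); keeping the steps as separate functions mirrors the modular, step-by-step process described above.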

Practical Magic: Use Cases for BuboGPT

With such an advanced tool, the possibilities are vast. Here are some potential real-world applications:

- Helping the Visually Impaired: Describe images or scenes in real-time.

- Smart Chatbots: Understand and react to images, videos, or audio sent by users.

- E-Learning: Provide explanations or feedback on diagrams, sketches, or recordings.

- Enhanced Search Engines: Understand and search based on images, videos, or audio.

- Customer Support: Offer solutions based on images or videos of user issues.

- Healthcare: Give preliminary assessments based on patient-submitted images or videos.

… and so much more.

BuboGPT is a prime example of how AI is constantly pushing the boundaries. It’s not just about understanding language anymore, but about bridging the gap between various ways of communicating, be it through words, pictures, or sounds. So, next time you’re chatting with an AI, remember – it might just be seeing and hearing right along with you!

Interested in diving deeper? Check out the original paper about the BuboGPT model or visit its GitHub repository.